[Previous lecture]

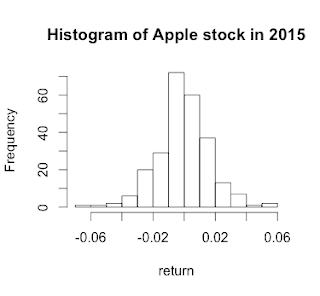

Getting stock volatility in R & Getting Histogram of returns

[Single Regression]

Single regression (also called simple linear regression) is a basic and powerful tool for quantifying a linear relationship between x and y, provided we assume that such a relationship exists. The assumed form is:

y = ax + b

What we want to know is a (the slope) and b (the intercept). Once we have them, we can predict y from x alone. However, regression does not tell us which variable causes which: y could cause x, or x could cause y, and single regression cannot distinguish the two. Keep this limitation in mind.
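For reference, a least-squares fit (the method behind R's lm) picks the a and b that minimize the sum of squared vertical distances between the observed points and the line. The block below states that standard textbook result for n observations (x_i, y_i); the bar notation for sample means is an addition for this note and is not used elsewhere in the post.

```latex
\min_{a,\,b} \sum_{i=1}^{n} \left( y_i - a x_i - b \right)^2
\quad\Longrightarrow\quad
a = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}
  = \frac{\operatorname{Cov}(x, y)}{\operatorname{Var}(x)},
\qquad
b = \bar{y} - a\,\bar{x}
```

The slope is just the covariance of x and y scaled by the variance of x, which is why covariance and regression are usually discussed together.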

[The relationship between Exxon Mobil equity and WTI crude oil price]

Let's formulate a hypothesis first. It seems like common sense that there is a positive relationship (linear, logarithmic, quadratic, or some other form) between the WTI price and Exxon Mobil's equity value: as the oil price goes up, so does Exxon's profit. We are going to test this hypothesis with an actual linear regression.

What we are going to do is:

(1) Get the data from the internet

(2) Transform the data into a form we can compute with

(3) Draw the scatter plot

(4) Run the single regression analysis

Let's take a look at the code.

[Code]

#Single Regression and Covariance

#Let's figure out the relationship between WTI and the stock price of Exxon

#You can download the data from https://research.stlouisfed.org/fred2/series/DCOILWTICO/downloaddata

#I store the data in my Downloads folder; change the path to match yours.

library(tseries)

library(zoo) #Time series data type

xom <- get.hist.quote("XOM", #Ticker symbol

start="2015-01-01", #Start date YYYY-MM-DD

end="2015-12-31" #End date YYYY-MM-DD

)

#I am going to use close value only

xom_zoo <- xom$Close

#Please download the file from stlouisfed

#Limit the range from 2015-01-01 to 2015-12-31 to compare apples to apples

wti <- read.csv("/Users/seokbongchoi/Downloads/DCOILWTICO.csv")

#read.csv may load VALUE as a factor (categorical) column, so convert it to character first.

wti$VALUE <- as.character(wti$VALUE)

#The data also contains the placeholder "." on days with no quote (you can see it in Excel). The command below removes those rows.

wti <- wti[wti$VALUE!=".", #Drop rows whose VALUE is "."

1:2 #Keep the first and second columns

]

#Finally I want to convert character to numeric value.

wti$VALUE <- as.numeric(wti$VALUE)

#We need to convert the data into the time series

wti_zoo <- read.zoo(wti, format="%Y-%m-%d")

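#What we need is the daily return: (P_t - P_{t-1}) / P_{t-1}

#In zoo, lag(x, k=-1) is the previous observation (the default k=1 is the next one)

xom_rate <- diff(xom_zoo)/lag(xom_zoo, k=-1)

wti_rate <- diff(wti_zoo)/lag(wti_zoo, k=-1)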

regression_result <- lm(xom_rate~wti_rate)

plot(y=xom_rate,

x=wti_rate,

pch=19, #I want to use dot

cex = .5, #The size of dot

main="The regression between Exxon & WTI in 2015",

ylab="Exxon return",

xlab="WTI return"

)

abline(regression_result, col="red") # regression line (y~x)

print(summary(regression_result))
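The regression only makes sense if each Exxon return is paired with the WTI return for the same dates. One way to check the return formula and the date alignment is to run them on a tiny made-up example in base R. The sketch below is not part of the original script: the data frames `stock` and `oil`, the helper `simple_return`, and all prices are hypothetical.

```r
# Toy prices (made-up numbers) on slightly different sets of dates.
stock <- data.frame(
  date  = as.Date(c("2015-01-02", "2015-01-05", "2015-01-06", "2015-01-07")),
  price = c(100, 102, 101, 104)
)
oil <- data.frame(
  date  = as.Date(c("2015-01-02", "2015-01-05", "2015-01-07")),
  price = c(50, 49, 51)
)

# Inner join on date first, so both returns cover the same intervals.
prices <- merge(stock, oil, by = "date", suffixes = c("_stock", "_oil"))
prices <- prices[order(prices$date), ]

# Simple return: (P_t - P_{t-1}) / P_{t-1}; the first date has no return.
simple_return <- function(p) diff(p) / head(p, -1)

returns <- data.frame(
  date      = prices$date[-1],
  stock_ret = simple_return(prices$price_stock),
  oil_ret   = simple_return(prices$price_oil)
)
print(returns)
```

Joining the prices before computing returns keeps a date that is missing from one series from silently pairing a one-day return with a two-day return.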

[Output]

<Summary for regression>

Call:

lm(formula = xom_rate ~ wti_rate)

Residuals:

Min 1Q Median 3Q Max

-0.059637 -0.006658 0.000426 0.006674 0.038156

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.0003544 0.0007800 0.454 0.65

wti_rate 0.2483610 0.0264306 9.397 <2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.01235 on 249 degrees of freedom

Multiple R-squared: 0.2618, Adjusted R-squared: 0.2588

F-statistic: 88.3 on 1 and 249 DF, p-value: < 2.2e-16

<Plot>

[Interpretation]

Knowing how to read the regression output is more important than running the code.

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.0003544 0.0007800 0.454 0.65

wti_rate 0.2483610 0.0264306 9.397 <2e-16 ***

So we have found the values of a (the slope) and b (the intercept):

a = 0.2483610

b = 0.0003544

So our fitted equation is:

y = 0.2483610 x + 0.0003544

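Thus, Exxon's daily stock return has a positive linear relationship with the daily return on WTI: a one-percentage-point rise in the WTI return is associated with a rise of about 0.25 percentage points in Exxon's return.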
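To make the fitted equation concrete, here is a minimal sketch that plugs a WTI return into it. The coefficients are copied from the summary output; the helper name `predict_xom_return` and the 2% scenario are hypothetical and only for illustration.

```r
# Coefficients copied from the regression summary output.
a <- 0.2483610  # slope on wti_rate
b <- 0.0003544  # intercept

# Hypothetical helper: predicted Exxon daily return for a given WTI daily return.
predict_xom_return <- function(wti_return) a * wti_return + b

# Hypothetical scenario: WTI rises 2% in one day.
predicted <- predict_xom_return(0.02)
print(predicted)
```

Under these numbers the model predicts an Exxon return of roughly 0.5% for that day, which is a point estimate only and says nothing about the scatter around the line.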

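Note that the p-value for the slope is less than 2*10^-16. The rule of thumb is that a coefficient with a p-value below 0.05 is statistically significant, so the slope is reliably different from zero. The p-value for the constant (0.65) is not significant, meaning the intercept cannot be distinguished from zero.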
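The t value and Pr(>|t|) columns are not independent pieces of information: they follow from the estimate, its standard error, and the residual degrees of freedom. The base R sketch below recomputes them from the numbers printed in the summary; the variable names are illustrative.

```r
# Values copied from the coefficient table in the summary output.
estimate  <- c(intercept = 0.0003544, wti_rate = 0.2483610)
std_error <- c(intercept = 0.0007800, wti_rate = 0.0264306)
df_resid  <- 249  # residual degrees of freedom from the summary

# t value = estimate / standard error
t_value <- estimate / std_error

# Two-sided p-value from the t distribution
p_value <- 2 * pt(-abs(t_value), df = df_resid)

print(round(t_value, 3))
print(p_value)
```

A large t value means the estimate is many standard errors away from zero, which is exactly what a tiny p-value expresses.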

The adjusted R-squared is 0.2588: our model explains 25.88% of the variability in Exxon's daily returns. About 75% of the variability is not explained by this model, meaning that more variables are needed to fully account for the equity value of Exxon (leverage ratio, revenue, profit, and so on).
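The adjusted figure can be reproduced from the other numbers in the summary. With n = 251 observations (249 residual degrees of freedom plus the 2 estimated coefficients) and k = 1 explanatory variable, the standard adjustment formula gives the value below; the symbols n and k are introduced here for the calculation.

```latex
\bar{R}^2 = 1 - (1 - R^2)\,\frac{n - 1}{n - k - 1}
          = 1 - (1 - 0.2618)\cdot\frac{250}{249}
          \approx 0.2588
```

In a regression with a single explanatory variable, R-squared is also the squared correlation between x and y, so the correlation between the two return series is about sqrt(0.2618), or roughly 0.51.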

However, for an equity value, explaining 25% of the variability with a single variable is a really big chunk. We will continue this discussion with multiple regression analysis.

[Next Post]

Multi regression between Exxon Mobil stock and WTI/Natural Gas/S&P 500